An Introduction to Ten15 - A personal retrospective.

Martin C. Atkins,

Mission Critical Applications Limited.

The Ten15 system was developed by

Michael J Foster,

Ian F Currie,

Philip W Core,

et al, at the

Royal Signals and Radar Establishment (RSRE, then DERA, now

QinetiQ) at Malvern in the U.K.

The project was active from around 1987 to 1992, and made many

breakthroughs in the areas of virtual machines, polymorphic

type systems, persistence, and distributed system design that

are still not generally known, and in some cases have not, to

my knowledge, even been re-discovered! With the increasing

popularity of virtual machine architectures, such as the JVM

and .NET, these ideas are more relevant than ever.

These pages are an attempt to publicise this work (better

late than never!). Although I did work on the project at the

University of York from 1988 until 1992, none of the ideas

described here are my own (unless explicitly stated otherwise),

and there might be inaccuracies caused by my never having been

on the core team, and my poor memory. I have tried to be as

precise as I can, but I apologise in advance for any

inaccuracies that might have crept in.

The name "Ten15" is (somewhat confusingly) used at different

times to refer to the Ten15 system, the Ten15 language (usually

called Ten15 Notation), and the Ten15 intermediate

representation for programs. Terms which I introduce below for

clarity, but are not the usual Ten15 (or Flex) nomenclature,

will be "in quotes".

Background

Ten15 was an attempt to make something like the

RSRE Flex environment available on modern systems. This has no

relationship with the Unix-like embedded operating system, also

called Flex, but

rather the capability system that RSRE built by replacing the

microcode in the

Three Rivers Computer Corporation (3RCC)

PERQ,

(see also here,

and here).

J. Gordon Hughes, CC BY-SA 3.0, via Wikimedia Commons

This in turn was an upgrade of the Flex mainframe, also

designed by the same group at RSRE, and built for them by Logica.

My understanding was that (a derivative of?) the Flex mainframe was deployed in a secure communications processor for the UK MOD.

But the relationship with

Ptarmigan

(a battlefield wide area network communications system for the British Army), which used the

Plessey System 250, is unclear.

Levy (below) claims the Plessey System 250 was both the first operational capability hardware system, and the first capability system sold commercially.

It was designed for a Mean Time Between Failures of 50 years! (See Chapter 4 of Levy's book.)

Capability Architectures

Flex was a capability architecture and operating system with

a graphical user interface, unlike any other computer system

I've ever heard of! The capability architecture meant that

programs did not run in a flat memory space, as we take for

granted these days. Instead a program consisted of a number of

memory objects, which in Flex could range in size from one

integer, to the whole of physical memory. The memory objects

are referred to by "pointers", and it is these pointers that

are called capabilities. However, capabilities were much

more than just pointers. It is impossible to create (or forge)

a capability without asking the system for a new object, and it

is impossible to access memory that was not pointed to by one

of the capabilities currently 'owned' by the program. Thus

capabilities formed the basis of the protection mechanism that

allowed concurrent programs, possibly owned by different users,

to execute without interfering with each other. Permissions

were also associated with capabilities, so that a memory object

could be defined to contain executable code, and another could

contain an array, say. Some systems, Flex included, also used capabilities to

refer to data stored on the disk.
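
As a rough illustration of the rules just described, here is a minimal Python sketch. It is not how Flex was implemented (Flex enforced these rules in microcode); the names `Perm`, `Capability` and `System` are hypothetical, and Python itself cannot make the token truly unforgeable. The point is only that access goes through a token minted by the system, and that permissions travel with the token.

```python
from enum import Flag, auto


class Perm(Flag):
    """Hypothetical permission bits attached to a capability."""
    READ = auto()
    WRITE = auto()
    EXECUTE = auto()


class Capability:
    """Opaque token: it carries no address that a program could alter."""


class System:
    """Stands in for the microcode: the only place capabilities are minted."""

    def __init__(self):
        self._objects = {}  # issued capability -> (permissions, memory object)

    def new_object(self, size, perms):
        cap = Capability()
        self._objects[cap] = (perms, [0] * size)
        return cap

    def _open(self, cap, needed, index):
        if cap not in self._objects:
            raise PermissionError("not a capability issued by this system")
        perms, words = self._objects[cap]
        if needed not in perms:
            raise PermissionError("capability lacks the required permission")
        if not 0 <= index < len(words):
            raise IndexError("access outside the memory object")
        return words

    def read(self, cap, index):
        return self._open(cap, Perm.READ, index)[index]

    def write(self, cap, index, value):
        self._open(cap, Perm.WRITE, index)[index] = value
```

A program holding a read/write capability can use the object it names; a home-made `Capability()` that the system never issued, or a write through a read-only capability, is refused.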

Capability architectures are described in much more detail

in this book by Henry M. Levy,

which is out-of-print, and hence can

be downloaded in full! Other more modern-ish systems using

capability architectures were Key Logic's

KeyKOS, and the

Extremely Reliable Operating System,

EROS - both so reliable that their web sites have to be accessed on the Wayback Machine!

(Wikipedia pages are at

KeyKOS and

EROS).

CapROS, derived from EROS, has both a homepage

here and a Wikipedia page

here, but

Coyotos is again on the Wayback Machine.

All a bit of a graveyard!

In 2024, perhaps the best-known actively developing operating system based on capabilities is the

seL4 micro-kernel, which is still the subject of research,

while increasingly seeing deployment, and use as the micro-kernel at the heart of larger operating systems, such as

Kry10.

Interest is also blossoming on the hardware front, with

CHERI from Cambridge,

which is the foundation technology of the

DSbD initiative with the

Morello processor from Arm, and its little brother,

Cheriot.

Flex

In Flex, capabilities were visible in the user interface,

and could be mixed freely with normal text stored in a "text

object", or "Edfile". In fact, a "text object" was simply a capability to an

array of lines; each line was an array of "glyphs"; and each

"glyph" was either an array of characters, or a capability

(defining glyphs simply as "a character or a capability" would

have been more logical and simpler, but the overheads were

prohibitive). Capabilities, including "text objects", could be

stored as capabilities in other text objects, or assigned to a

string name, and stored in a "directory".
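
The shape of a "text object" can be sketched in a few lines of Python. This is only a model of the structure described above: the `Capability` class, its `label` and `target` fields, and the `[label]` rendering are assumptions made for the example, not Flex's actual representation.

```python
class Capability:
    """Stand-in for a Flex capability; `label` is what the cartouche shows."""

    def __init__(self, label, target=None):
        self.label = label
        self.target = target


def render(text_object):
    """Show a text object as plain text, drawing capabilities as [label]."""
    rendered_lines = []
    for line in text_object:              # a text object is an array of lines
        parts = []
        for glyph in line:                # a line is an array of glyphs
            if isinstance(glyph, Capability):
                parts.append(f"[{glyph.label}]")
            else:                         # otherwise a run of characters
                parts.append(glyph)
        rendered_lines.append("".join(parts))
    return "\n".join(rendered_lines)


maths = Capability("maths module")
# A text object can itself be held as a capability inside another one.
notes = Capability("notes", target=[["see ", maths]])
source = [["use ", maths, " in this program"], ["details: ", notes]]
```

Here `render(source)` gives two lines of text with the two capabilities shown in brackets, and `render(notes.target)` displays the nested text object.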

The user interface was essentially just a text editor for

"text objects", but it also allowed embedded capabilities to be

manipulated by cut/copy/paste, and other special operations. A

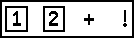

capability was displayed as as a cartouche, a box with

text inside. Evaluating a command line would replace it with

the capability containing the value, and the cartouche for the

capability would contain the evaluated text. Since this usually

included other cartouches, the display could quickly start to

look something like this:

The exclamation mark, '!', means 'apply', and expressions

are entered postfix, so this applies '+' to two parameters, the

results of evaluating '1', and '2'. Presumably the enclosing

cartouche's value is 3! Actually the cartouches' values are

capabilities to the integer values '1', '2', and '3', rather

than the values themselves, and so the type of '+' is something

like:

'+': ptr int × ptr int -> ptr int

rather than

'+': int × int -> int

as one might expect, but this user interface was not really

designed to be a pocket calculator!
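
The postfix 'apply' idea and the "pointer to int" typing of '+' can be sketched as a tiny stack evaluator. The token syntax `1 2 + !` and the fixed arity table are assumptions for this example, not the real Flex command syntax, and `Ptr` merely stands in for a capability to an integer.

```python
class Ptr:
    """A boxed value, standing in for a capability to an integer."""

    def __init__(self, value):
        self.value = value


def plus(a, b):
    """'+': ptr int x ptr int -> ptr int."""
    return Ptr(a.value + b.value)


FUNCTIONS = {"+": (plus, 2)}  # name -> (function, number of parameters)


def evaluate(tokens):
    """Evaluate postfix tokens; '!' applies the function on top of the stack."""
    stack = []
    for token in tokens:
        if token == "!":
            function, arity = stack.pop()
            arguments = [stack.pop() for _ in range(arity)][::-1]
            stack.append(function(*arguments))
        elif token in FUNCTIONS:
            stack.append(FUNCTIONS[token])   # a function is just another value
        else:
            stack.append(Ptr(int(token)))    # literals evaluate to boxed ints
    return stack.pop()


result = evaluate("1 2 + !".split())
```

The result is a `Ptr` whose `value` is 3, mirroring the way the enclosing cartouche holds a capability to the integer rather than the integer itself.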

The compilers were (loosely speaking) just functions from

"text objects" to module values, and a module value was just a

record containing the interface specification, and compiled

data and function values. There were no such things as

include files; if a program needed to link with a

library, then (a capability to) the library's module value was

simply inserted into the persistent "text object" holding the

program's source. The compiler would pick up the library's

interface specification from the module, and the module value

itself would be included in the code output by the compiler. At

run-time, the program (or the linker) could link to the

functions in the library's executable, also taken from the same

module value. Module values were updateable, so that a module

could be re-compiled, and as long as the interface

specification had not changed, all programs with references to

the module value would transparently start to use the new

version.
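
A minimal sketch of an updateable module value, under the assumption that an interface can be modelled as a dictionary of names to type strings. `ModuleValue`, `recompile` and `compile_program` are hypothetical names for this example only.

```python
class ModuleValue:
    """A record holding an interface specification and compiled values."""

    def __init__(self, interface, functions):
        self.interface = dict(interface)
        self.functions = dict(functions)

    def recompile(self, interface, functions):
        """Update in place; only allowed if the interface is unchanged."""
        if dict(interface) != self.interface:
            raise TypeError("interface changed: dependants need recompiling")
        self.functions = dict(functions)


def compile_program(library):
    """Toy 'compiler': checks the interface, then embeds the module value."""
    if library.interface.get("scale") != "int -> int":
        raise TypeError("library does not provide scale: int -> int")

    def program(n):
        # Linked at run time through the module value, not a copied function.
        return library.functions["scale"](n)

    return program


lib = ModuleValue({"scale": "int -> int"}, {"scale": lambda n: n * 2})
program = compile_program(lib)
before = program(10)
lib.recompile({"scale": "int -> int"}, {"scale": lambda n: n * 3})
after = program(10)
```

Because `program` holds a reference to the module value rather than a copy of its contents, it picks up the re-compiled `scale` without being rebuilt, while an attempt to change the interface is rejected.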

Flex was usually programmed in Algol-68 (Ian Currie was on

one of the Algol-68 committees - I'm not sure of the details),

with the extension that procedures could (safely) escape from

their scope - in modern language, this meant that functions

were first-class values - indeed, in Flex the result of a

program could be a function! Not the text for a

function, or even the binary implementing the function, but a

capability to the function closure value, complete with

bindings to all the variables in its non-local scope. I think

there was also an experimental Ada compiler.
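
For readers who have not met the idea, a procedure "escaping from its scope" looks like this in a modern language. The example is in Python rather than Algol-68, and the names are invented for illustration.

```python
def make_counter(start):
    """Return a function that keeps its binding to `count` after we return."""
    count = start

    def next_value():
        nonlocal count        # refers to the variable in the enclosing scope
        count += 1
        return count

    return next_value         # the closure outlives the call that created it


counter = make_counter(10)
first = counter()
second = counter()
```

The returned function carries its non-local bindings with it, which is why the memory holding `count` cannot simply be freed when `make_counter` returns, and hence the need for garbage collection.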

In order to support this, Flex's main memory had to be

garbage-collected. Flex also supported capabilities to

objects on the disk and to remote objects accessible over the

network. In fact, the Flex disk was also garbage collected - an

off-line operation that used to take a couple of hours for the

20Mbyte(?) PERQ disks. This was, by the way, ample space for the complete

Flex system, including all the source!

Flex was designed to be an integrated program development

environment, but a variant was deployed for at least one

embedded application.

The motivation for Ten15

With modern microprocessor-based systems (here we are

talking about MicroVAX, Sun 3, Sun 4, 386-based PCs, etc., but

the conclusion has not changed!) microcoding a capability

architecture was no longer possible. So it began to look

increasingly like the Flex system would die with the PERQ. In

order to avoid this a radical new approach was needed, and

Ten15 was invented. Folklore has it that the idea for Ten15 was

proposed at 10:15 in the morning, and hence the name! Perhaps

it's a good thing that the designers didn't work for an

advertising company ;-)

Ten15 took a virtual machine approach to portability, but

this did not automatically solve the problem of enforcing the

capability architecture, without making every access to a

capability go through a permission check - an approach that

would have had horrible performance implications. Instead it

was decided to make everything in the virtual machine

strongly-typed, and to use the type-checking to enforce the

permission constraints.

For example, in Flex each word in memory was tagged so that

capabilities could be distinguished (by the hardware/microcode)

from other data. These tags were not directly accessible to

normal instructions, and so it was not possible to forge a

capability to access memory that the program had not been

explicitly given the right to access. In Ten15, the compiler

knew the type of every word of memory directly accessible to

the program at each point in its execution. Thus, if the

program currently held the base address of a record, and

attempted to use the 5th member, say, as a pointer to an

integer, then the compiler would know if this field really did

contain a valid pointer to an integer, or whether it was some

other data, and the programmer was (intentionally, or

unintentionally) trying to access something he had no right to

access.

This is no different from any strongly-typed language;

however, in Ten15 the type system was enforced uniformly across

every data item in the system - there was no way around the

type system visible to the user - and thus the type system

(together with run-time bounds checking, and garbage

collection) actually became the equivalent, as far as security

and safety were concerned, of the virtual memory and dynamic address

translation mechanisms in conventional systems.
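
The record example above can be made concrete with a small sketch of a checker that decides, before the program runs, whether a field may be used as a pointer. The type representation (`Int`, `PtrTo`, `Record`) is an assumption made for this example and is far simpler than Ten15's real type system.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Int:
    """The type of plain integers."""


@dataclass(frozen=True)
class PtrTo:
    """The type of pointers to values of type `target`."""
    target: object


@dataclass(frozen=True)
class Record:
    """The type of records; `fields` lists the type of each member."""
    fields: tuple


def check_deref_field(record_type, index):
    """Type of 'load member `index`, then follow it as a pointer'."""
    if not 0 <= index < len(record_type.fields):
        raise TypeError("record has no such member")
    field_type = record_type.fields[index]
    if not isinstance(field_type, PtrTo):
        raise TypeError("member is not a pointer, so it cannot be followed")
    return field_type.target


good = Record((Int(), Int(), Int(), Int(), PtrTo(Int())))
bad = Record((Int(), Int(), Int(), Int(), Int()))
```

`check_deref_field(good, 4)` yields `Int()`, whereas the same request on `bad` is rejected at checking time, so no run-time permission check is needed on the access itself.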

In retrospect, writing an emulator for the Flex/PERQ

capability machine would probably have achieved the project's

goals more quickly, and certainly by today the performance

would have been adequate - but such is the benefit of

hindsight, and perhaps the performance would not have been good

enough, quickly enough to be really useful!

Ten15's virtual machine

Of course, virtual machines were already old-hat in 1987 -

Smalltalk-80 had been around since, well, 1980, but at that

time processors were still so slow that these kinds of virtual

machines had a very bad reputation for performance. Thus Ten15

also took a different route here - using something more like

Just-In-Time compilation.

Like Microsoft's .NET framework,

Ten15's virtual machine was intended to support the

interworking of a number of high-level languages. However,

unlike .NET, the virtual machine wasn't designed from the union

of all the features in all the languages that were of interest,

rather it was an evaluator for a strongly-typed higher-order

eager-evaluation lambda calculus, represented by a parse tree,

which by its generality could automatically support any typed

imperative language up to, and well beyond,

Standard ML,

and anything that could be mapped into any of these.

Another way of looking at this is that Ten15 was an idealized

abstraction of high-level languages, whereas other virtual

machines have usually been idealized abstractions of

assembly-level languages.
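
To give a feel for "an evaluator for a strongly-typed higher-order eager-evaluation lambda calculus, represented by a parse tree", here is a deliberately tiny sketch. The node names and the single `int` base type are assumptions for the example; Ten15's actual representation was much richer.

```python
from dataclasses import dataclass

INT = "int"


@dataclass(frozen=True)
class Fn:
    """Function type: arg -> result."""
    arg: object
    result: object


@dataclass
class Lit:
    value: int


@dataclass
class Var:
    name: str


@dataclass
class Lam:
    param: str
    param_type: object
    body: object


@dataclass
class App:
    fn: object
    arg: object


@dataclass
class Add:
    left: object
    right: object


def type_of(node, env):
    """Check the tree and return its type, or raise TypeError."""
    if isinstance(node, Lit):
        return INT
    if isinstance(node, Var):
        return env[node.name]
    if isinstance(node, Lam):
        body_env = {**env, node.param: node.param_type}
        return Fn(node.param_type, type_of(node.body, body_env))
    if isinstance(node, Add):
        if type_of(node.left, env) != INT or type_of(node.right, env) != INT:
            raise TypeError("'+' needs two ints")
        return INT
    if isinstance(node, App):
        fn_type = type_of(node.fn, env)
        if not isinstance(fn_type, Fn) or fn_type.arg != type_of(node.arg, env):
            raise TypeError("function applied to the wrong type of argument")
        return fn_type.result
    raise TypeError("unknown node")


def evaluate(node, env):
    """Eager evaluation: arguments are evaluated before the call."""
    if isinstance(node, Lit):
        return node.value
    if isinstance(node, Var):
        return env[node.name]
    if isinstance(node, Lam):
        return lambda value: evaluate(node.body, {**env, node.param: value})
    if isinstance(node, Add):
        return evaluate(node.left, env) + evaluate(node.right, env)
    if isinstance(node, App):
        return evaluate(node.fn, env)(evaluate(node.arg, env))
    raise TypeError("unknown node")


inc = Lam("n", INT, Add(Var("n"), Lit(1)))
twice = Lam("f", Fn(INT, INT), Lam("x", INT, App(Var("f"), App(Var("f"), Var("x")))))
program = App(App(twice, inc), Lit(5))
```

`type_of(program, {})` is `"int"` and `evaluate(program, {})` is 7; a tree such as `App(Lit(1), Lit(2))` is rejected by `type_of` before it is ever run.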

As we have seen, the really unique design decision in Ten15

was for all the operations in the virtual machine to be

strongly-typed, and to use strong-typing to provide the

security that Flex had got by using microcode-enforced

capabilities. This meant that the type system had to be very

powerful, otherwise it would limit the languages that could be

supported. Ten15's type system included first-class function

types, explicit bounded universal and existential polymorphism,

with subtypes and range types. The only other languages (known

to the author) that have approached this in expressive power

are

Cardelli's

F<: and Quest, and some

unimplemented 'paper' languages, such as 'Fun' used in this

excellent ACM Computing Surveys paper on type systems:

On understanding types, data abstraction, and polymorphism, by

Cardelli and Wegner.

Ten15 also had a type Type, the type

of values that represent a type at runtime, but this was

usually only needed for the interactive shell, for debugging,

and for implementing run-time typed languages, such as

LISP.
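
For readers unfamiliar with the terms, bounded universal and existential polymorphism can be loosely illustrated with Python's type annotations. This is only an analogy: Python does not check these annotations when the program runs, whereas Ten15 enforced its types everywhere, and the names below (`Shape`, `largest`, `CounterPackage`) are invented for the example.

```python
from dataclasses import dataclass
from typing import Callable, Generic, TypeVar


class Shape:
    def area(self) -> float:
        raise NotImplementedError


@dataclass
class Square(Shape):
    side: float

    def area(self) -> float:
        return self.side * self.side


S = TypeVar("S", bound=Shape)   # "for all S that are subtypes of Shape"


def largest(a: S, b: S) -> S:
    """Bounded universal: the result keeps the caller's precise type S."""
    return a if a.area() >= b.area() else b


R = TypeVar("R")


@dataclass
class CounterPackage(Generic[R]):
    """Existential: "there is some R" with these operations; R stays hidden."""
    new: R
    inc: Callable[[R], R]
    get: Callable[[R], int]


def count_twice(package):
    """A client that works for any hidden representation R."""
    return package.get(package.inc(package.inc(package.new)))


int_counter = CounterPackage(new=0, inc=lambda r: r + 1, get=lambda r: r)
list_counter = CounterPackage(new=[], inc=lambda r: r + [None], get=len)
```

`count_twice` gives 2 for both packages even though one represents the counter as an integer and the other as a list, which is the essence of the existential (abstract data type) reading.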

Some novel features of the type system, such as unique

types, and a solution to the problem of typing updateable

values never, to my knowledge, even got published (until now,

that is; if you are interested, see my articles below).

Rather than using just-in-time compilation as we now know

it, to speed up the execution of this code, Ten15 did the

compilation as part of the loading process. Thus by the time

you came to run something, it had already been converted to

optimised binary instructions for the actual hardware you were

running on.
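
The difference between interpreting a parse tree on every run and translating it once at load time can be sketched as follows. Python closures stand in here for the native instructions that Ten15 actually generated, and the tuple-based tree format is an assumption for the example.

```python
def load(node):
    """Translate a tiny parse tree into a callable once, at load time."""
    kind = node[0]
    if kind == "lit":
        value = node[1]
        return lambda env: value
    if kind == "var":
        name = node[1]
        return lambda env: env[name]
    if kind == "add":
        left, right = load(node[1]), load(node[2])   # sub-trees translated once
        return lambda env: left(env) + right(env)
    raise ValueError(f"unknown node kind: {kind}")


# Loading walks the tree; running afterwards never looks at the tree again.
run = load(("add", ("var", "x"), ("lit", 1)))
answer = run({"x": 41})
```

All the dispatch on node kinds happens inside `load`; each later call of `run` only executes the already-built closures.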

Non-main memory types

As we have said, every aspect of Ten15 was strongly-typed.

This included objects stored in the persistent object store (on

disk), and references to objects on remote Ten15 machines,

accessed over the network.

The Ten15 filestore was modelled on that of Flex, with a

mostly non-overwriting structure, and object roots stored in

persistent variables updated using two-phase commit algorithms

from database theory, so that the filestore was never in an

inconsistent state. It was also possible to commit groups of

persistent variables simultaneously, thus providing

transactions, and this was also more efficient (used fewer

writes to disk) than a sequence of single-variable commits. A

three-phase commit algorithm was designed (and, I believe,

implemented), that allowed distributed transactions modifying

multiple filestores accessed over the network. The filestore

was garbage collected, like the Flex filestore.
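
The idea of a non-overwriting store whose roots are switched atomically can be sketched in memory. This is not the Ten15 or Flex algorithm, whose on-disk details are not given here; `FileStore` and its methods are hypothetical, and a real implementation would have to make the final switch durable on disk.

```python
class FileStore:
    """In-memory model: objects are only appended, roots are repointed."""

    def __init__(self):
        self._blocks = []   # append-only: existing blocks are never overwritten
        self._roots = {}    # persistent variable name -> index of its object

    def write_object(self, data):
        """Store a new object and return its index; nothing is visible yet."""
        self._blocks.append(data)
        return len(self._blocks) - 1

    def read_root(self, name):
        return self._blocks[self._roots[name]]

    def commit(self, updates):
        """Repoint a group of persistent variables, all or nothing."""
        # Phase 1: validate every update and build the new root table aside.
        for index in updates.values():
            if not 0 <= index < len(self._blocks):
                raise ValueError("update refers to an object that was not written")
        new_roots = {**self._roots, **updates}
        # Phase 2: one switch makes the whole group visible together.
        self._roots = new_roots


store = FileStore()
store.commit({"doc": store.write_object("v1")})
doc_v2 = store.write_object("v2")
index_v2 = store.write_object("index of v2")
store.commit({"doc": doc_v2, "index": index_v2})
```

If any update in a group is invalid, the old roots remain in force, so readers see either the state before the group commit or the state after it, never a mixture.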

Of course, there was nothing to stop Ten15 systems from

communicating with non-Ten15 systems using, say, TCP/IP, or from

reading non-Ten15 files. The results would still have had a

good, strong type, probably "array of Byte", and would have to

have been parsed into higher-level types. This is no different

from the way that current systems usually operate. The

difference when accessing native Ten15 data was that this

parsing and type recovery was carried out transparently by the

underlying system, and the application only had to deal with

the high-level, parsed representation.
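
The manual step of turning an "array of Byte" into a higher-level type looks like this in a conventional language. The 10-byte record layout and the `Reading` type are invented for the example; only the `struct` calls are standard Python.

```python
import struct
from dataclasses import dataclass


@dataclass
class Reading:
    """Hypothetical high-level type recovered from raw bytes."""
    sensor_id: int
    value: float


def parse_reading(raw: bytes) -> Reading:
    """Parse a little-endian record: unsigned 16-bit id, then 64-bit float."""
    if len(raw) != 10:
        raise ValueError("expected exactly 10 bytes")
    sensor_id, value = struct.unpack("<Hd", raw)
    return Reading(sensor_id, value)


raw = struct.pack("<Hd", 7, 1.5)   # what might arrive from a foreign system
reading = parse_reading(raw)
```

With native Ten15 data, the equivalent of `parse_reading` was supplied by the system itself, so application code only ever saw the equivalent of `Reading`.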

Languages on Ten15

The main language for programming the Ten15 system was the

Ten15 language. This was the closest thing to an assembler

language for Ten15, but was more like an extended Standard ML.

It took very much the role of C# in Microsoft's .NET, being the

language that most readily mapped onto the virtual machine, and

the only language with full access to all the virtual machine's

facilities. Algol-68 was also very important, since most of the

Flex code was written in Algol-68, and an Ada compiler was

being developed. Of course, any language could interwork with

any other, so long as the data being transferred didn't use

facilities (or types) beyond either language's

capabilities.

Unfortunately, the one language Ten15 could not support was

C, since C is not adequately typed, and this - in the era of

C's rising dominance - was a big reason for Ten15's downfall.

Ten15 was seen as being increasingly marginalized by everyone's

desire to run C, and increasingly C++, programs. This is

somewhat ironic given the recent proliferation and popularity

of Perl, Python, Ruby, Java, C#, etc, all of which would have

mapped nicely onto Ten15.

Low-Level Ten15 and TDF

In order to make the porting of Ten15 easier, I suggested (I

was attempting to port Ten15 to the 680X0 at the time!) that a

lower-level, intermediate language be defined. This would be

the un-typed output of the Ten15 compiler, after type-checking

had succeeded, and all the target-independent optimisations had

been applied. In other words, it would be the input to the

target-dependent part of the Ten15 load-time compiler, which

would become the only part of the compiler that would need to

be re-targeted to run Ten15 on a new computer architecture. In

other respects this new intermediate language was much like

normal Ten15 code, being a parse tree of a lambda-calculus-like

language. It came to be called "low-level Ten15".
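
The step from a typed tree to an untyped one can be sketched as a simple erasure pass. The tuple-based tree format is an assumption for this example, and the sketch presumes that type-checking has already succeeded; it says nothing about the optimisations that were applied at the same stage.

```python
def erase(node):
    """Strip type annotations from an already-checked parse tree."""
    kind = node[0]
    if kind == "lam":                 # ("lam", param, param_type, body)
        _, param, _param_type, body = node
        return ("lam", param, erase(body))
    if kind in ("app", "add"):        # (kind, left, right)
        return (kind, erase(node[1]), erase(node[2]))
    return node                       # ("lit", n) and ("var", name) have no types


typed = ("lam", "x", "int", ("add", ("var", "x"), ("lit", 1)))
untyped = erase(typed)
```

The result has the same lambda-calculus shape as the input, minus the annotations, which is why only the target-dependent back end would need to change for a new machine.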

It was realised that low-level Ten15, since it was untyped,

could actually be the target of weakly-typed languages, such as

C and C++, and when the Open Software Foundation (OSF)

sent out their request for technologies for ANDF, the

Architecturally-Neutral Distribution Format, low-level Ten15

was renamed TDF (standing for "Ten15 Distribution Format"), and

proposed to the OSF. This bid was eventually successful, with

TDF winning the OSF competition for ANDF, but in the process of

making the application, work on Ten15 proper was halted, and as

far as I know, never resumed.

Since then, TDF has itself had a checkered history. Although it

met all the OSF requirements, and won the ANDF competition,

its take-up was disappointing. After some years, the

whole toolkit, including an optimizing C/C++ compiler and

installation tools for a number of target platforms, was

renamed "TenDRA", and released as open-source. It is even

available as a Debian

package! Very recently there seems to be a renewal of interest

with the setting up of TenDRA.org, and new developments

appear to be happening. See TenDRA.org for more information

about the history and past and future development of TDF.

It appears that the TDF acronym was retrospectively

re-defined to mean "TenDRA Distribution Format", despite the

fact that TDF pre-dates TenDRA by at least 9 years, thus

pushing references to Ten15 further into the mists of

history....

Specific Topics

The Ten15 Type system

The Ten15 run-time system

- Intercalation

- Persistent datastore

References

- The Algebraic Specification of a Target Machine:

Ten15, J M Foster, Chapter 9 in ??(pages 198-225).

Computer Systems Series. Published by Pitman, London,

1989.

- Ten15: An Abstract Machine for Portable

Environments, Ian F. Currie, J. M. Foster, P. W. Core, in

Proceedings of ESEC '87, 1st European Software Engineering

Conference, edited by Howard K. Nichols and Dan Simpson,

Strasbourg, France, September 9-11, 1987. No 289 in the

Lecture Notes in Computer Science series, published by

Springer Verlag, 1987. ISBN 3-540-18712-X.

- Remote Capabilities, J. M. Foster, Ian F. Currie.

The Computer Journal 30(5): 451-457 (1987)

- Validating Microcode Algebraically, J. M. Foster.

The Computer Journal 29(5): 416-422 (1986)

- Some IPSE aspects of the FLEX project, I.F. Currie,

Chapter 6 in Integrated project support environments (pages 76-85),

edited by John McDermid. Published by Peter Peregrinus Ltd, 1985

(ISBN 0 86341 050 2).

This book is the proceedings of

"The Conference on Integrated Project Support Environments"

held at the University of York (U.K.), 10-12 April, 1985.

To be added:

- RSRE reports on Flex and Ten15

- Computer Journal paper

- My Thesis

- Tim Blanchard's Thesis

- did anything else get out?


Copyright 2002, 2003, 2004, 2024 Martin Atkins

This page can be linked to, and unedited copies can be

freely reproduced.
